FROM FANS TO FLUIDS:

THE CRITICAL NEED FOR LIQUID COOLING IN AI DATACENTERS

Forget Moore’s Law. The artificial intelligence tsunami has brought a new growth phenomenon, a doubling of compute every six months, and with it unprecedented strain on datacenter cooling infrastructure.

Between training models with potentially hundreds of billions of parameters and serving cloud-based AI services built on those models around the globe, power demands on datacenters adopting AI workloads have exploded and show no sign of slowing. The 5 to 20 kW per rack envelope common in datacenters for the last 20 years is now giving way to 30 kW and even 45 kW per-rack configurations to support ultra-dense CPU compute, while AI GPU racks draw up to 120 kW each.

Not surprisingly, AI datacenters now face the limits of air cooling. A server rack requires about 1,000 CFM of airflow for every 10 kW of power draw. Imagine a modern AI rack requiring the constant airflow of 40 hair dryers on full blast.
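The 1,000 CFM per 10 kW rule of thumb follows from the standard sensible-heat relation for air, BTU/hr = 1.08 × CFM × ΔT (°F). The sketch below assumes standard air and a front-to-back temperature rise of about 31.6 °F (roughly 17.6 °C); that rise is our back-calculated assumption, not a figure from the text, and the function name is hypothetical. Under those assumptions a 120 kW rack needs roughly 12,000 CFM.

```python
# Sensible-heat airflow estimate for an air-cooled rack (imperial units).
# Assumption: standard-air constant 1.08 BTU/(hr*CFM*degF); real values vary
# with altitude, humidity, and inlet temperature.

WATTS_TO_BTU_PER_HR = 3.412  # 1 W is about 3.412 BTU/hr


def required_cfm(rack_kw: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to carry rack_kw of heat with a delta_t_f rise."""
    btu_per_hr = rack_kw * 1000 * WATTS_TO_BTU_PER_HR
    return btu_per_hr / (1.08 * delta_t_f)


if __name__ == "__main__":
    # A ~31.6 degF (about 17.6 degC) rise reproduces "1000 CFM per 10 kW".
    for kw in (10, 45, 120):
        print(f"{kw:>4} kW -> {required_cfm(kw, 31.6):8,.0f} CFM")
```

Because airflow scales linearly with heat load at a fixed temperature rise, every additional 10 kW in the rack adds another ~1,000 CFM unless the allowed rise grows.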

Wait, there’s more. Factor in advances such as NVIDIA’s Blackwell GPUs, rated at up to 1200W each in GB200 Superchip configurations, compared to last year’s 700W Hopper: a roughly 70% jump per chip. Then consider processors like Intel’s Emerald Rapids family, which already includes SKUs with 64 cores and a 385W TDP per socket.

Now multiply those numbers across a full rack. Then dozens of racks.
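The per-chip and per-rack arithmetic can be sketched as follows. The 700W and 1200W figures come from the text; the 64-accelerator rack is a hypothetical count chosen only for illustration, and the helper names are ours. Note that this counts accelerators alone, before CPUs, memory, networking, and power-conversion losses.

```python
# Per-chip and per-rack power arithmetic for the figures above.
# Hypothetical: a rack holding 64 accelerators; real rack designs differ, and
# CPUs, memory, networking, and power-conversion losses add more on top.

def pct_increase(old_w: float, new_w: float) -> float:
    """Percentage increase from old_w to new_w."""
    return (new_w - old_w) / old_w * 100


def accelerator_kw(count: int, watts_each: float) -> float:
    """Accelerator-only power for one rack, in kW."""
    return count * watts_each / 1000


if __name__ == "__main__":
    print(f"Per-chip jump: {pct_increase(700, 1200):.1f}%")  # 71.4%
    gpus_per_rack = 64  # hypothetical
    for watts in (700, 1200):
        kw = accelerator_kw(gpus_per_rack, watts)
        print(f"{gpus_per_rack} x {watts} W = {kw:.1f} kW")
```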

One megawatt used to be sufficient to power 30 to 50 racks, but with today’s AI workloads, that number drops to about ten. We are already seeing fully configured racks drawing 70 to 100 kW.
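The racks-per-megawatt figures are simple division. The sketch below adds an optional PUE (power usage effectiveness) parameter, our assumption, to show how cooling and other facility overhead shrink the IT budget further; with `pue=1.0` it reproduces the text’s IT-only arithmetic.

```python
# How many racks fit in a fixed power budget.
# Assumption: site_kw is total facility power and pue (power usage
# effectiveness) scales IT load down to account for cooling and overhead.
# pue=1.0 reproduces the simple "IT power only" arithmetic in the text.

def racks_per_megawatt(rack_kw: float, site_kw: float = 1000.0, pue: float = 1.0) -> int:
    """Whole racks of rack_kw each that fit in site_kw after overhead."""
    it_kw = site_kw / pue
    return int(it_kw // rack_kw)


if __name__ == "__main__":
    for rack_kw in (20, 33, 100):
        print(f"{rack_kw:>3} kW racks: {racks_per_megawatt(rack_kw)} per MW")
    # With a hypothetical PUE of 1.3, the 100 kW case drops to 7 racks.
    print(racks_per_megawatt(100, pue=1.3))
```

The 20 kW and 33 kW cases bracket the old 30 to 50 rack range, while 100 kW racks leave room for only ten.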

Heat threatens to roast datacenters’ ability to support AI. Fortunately, Hyve and liquid cooling have the means to douse this threat and keep datacenters scaling.

WHY LIQUID WILL WIN

IBM designed calibrated vectored cooling (CVC) for dense blade server systems back in 2005. CVC uses fans and ducts to direct cool air across the hottest system components. By targeting those components, CVC cools more efficiently than traditional air cooling and needs fewer total fans.

Similarly, free cooling, which exchanges datacenter heat with the outside environment, showed promise a decade ago when Intel championed the approach. But even Intel’s most optimized design topped out at 43 kW per rack, and that was long before generative AI and large language models (LLMs).

Put simply, even the most forward-looking air-based approaches to datacenter cooling won’t handle tomorrow’s AI needs. But water will.

According to power quality firm CTM Magnetics, “water is 23.5 times more efficient in transferring heat than air. Further, given a specific volume of water flow over a hot component (the volumetric flow rate), water has a heat carrying capacity nearly 3,300 times that of air.”
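Both quoted ratios can be roughly reproduced from textbook property tables. The 3,300 figure compares volumetric heat capacity (density times specific heat), and the 23.5 figure most likely reflects the ratio of thermal conductivities; that second mapping is our assumption, not CTM Magnetics’ stated method. With the approximate property values below (near 25 °C and 1 atm), the ratios come out near 3,500 and 23, with the exact numbers shifting with temperature and pressure.

```python
# Textbook property values near 25 degC and 1 atm (approximate).
# Units: density kg/m^3, cp J/(kg*K), k (thermal conductivity) W/(m*K).
WATER = {"density": 997.0, "cp": 4181.0, "k": 0.607}
AIR = {"density": 1.184, "cp": 1007.0, "k": 0.0262}


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat absorbed per cubic meter per kelvin of temperature rise, J/(m^3*K)."""
    return fluid["density"] * fluid["cp"]


if __name__ == "__main__":
    ratio_vol = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
    ratio_k = WATER["k"] / AIR["k"]
    print(f"volumetric heat capacity ratio: {ratio_vol:,.0f}")
    print(f"thermal conductivity ratio:     {ratio_k:.1f}")
```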

Naturally, this doesn’t mean a liquid loop will move 3,300 times as much heat as an air system in practice. Water boils at 100° C (212° F), which caps how much heat it can absorb while staying liquid. Currently, most liquid cooling systems operate below 70° C for safety reasons and to prevent softening of the plastic and rubber components in the system. (A datacenter could set fan speeds too low to save energy and inadvertently cause such softening. Experienced integrators like Hyve build in fail-safes that override such settings and revert to safe fan speeds.) Many datacenters don’t want their liquid cooling temperatures exceeding 45° C, although some tolerate up to 60° C if there’s sufficient flow and pressure to support it.

The headline benefit of liquid cooling is energy savings, as liquid systems reduce fan use and, usually, room air conditioning. The less-appreciated benefit is improved compute performance. Modern CPUs and GPUs have an opportunistic “turbo” mode: the processor defaults to a moderate speed that handles the current workload at a relatively low power draw, and when thermal and power headroom allow, it shifts up into a turbo state representing its maximum official performance (without overclocking). In other words, a so-called 3.6 GHz CPU might spend most of its life running at 2.8 GHz to save power, reaching 3.6 GHz only in short bursts, sometimes lasting just a few milliseconds.

However, turbo modes are often throttled by temperature limits. A datacenter’s policy might be to run CPUs five degrees below their maximum allowed threshold to save power and keep fans at lower RPM. But that temperature ceiling also limits how far the chip can reach into turbo mode. Our 3.6 GHz CPU, when saddled with temperature restrictions, might reach 3.6 GHz for less than 1% of its life.
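A toy model makes the throttling effect concrete. It assumes, hypothetically, that the chip runs at turbo whenever a temperature sample sits below the throttle point and at base clock otherwise; real boost algorithms also weigh power, current, and workload limits and step through many intermediate clocks. The 2.8 and 3.6 GHz defaults come from the text, while the sample temperatures and function names are invented for illustration.

```python
# Toy model of temperature-limited turbo residency (hypothetical, simplified).
from typing import Sequence


def turbo_residency(temps_c: Sequence[float], throttle_c: float) -> float:
    """Fraction of samples in which the chip is cool enough to turbo."""
    if not temps_c:
        raise ValueError("need at least one temperature sample")
    return sum(1 for t in temps_c if t < throttle_c) / len(temps_c)


def effective_clock_ghz(residency: float, base_ghz: float = 2.8, turbo_ghz: float = 3.6) -> float:
    """Time-weighted average clock for a given turbo residency."""
    return base_ghz + residency * (turbo_ghz - base_ghz)


if __name__ == "__main__":
    samples = [70, 75, 82, 85, 88]  # hypothetical die temperatures, degC
    r = turbo_residency(samples, throttle_c=80)
    print(f"residency {r:.0%}, average clock {effective_clock_ghz(r):.2f} GHz")
    print(f"1% residency -> {effective_clock_ghz(0.01):.3f} GHz")
```

At 1% residency the average clock barely moves off 2.8 GHz, which is why lowering die temperature below the throttle point matters more than the chip’s headline turbo rating.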

We know that liquid-cooled GPUs run only about 13° C above their coolant temperature, so an 80° C air-cooled GPU on a 45° C coolant loop will drop to roughly 57° C. We also know that for every 10° C drop in a processor’s temperature, that chip’s power consumption falls by about 4 percent. Thus, that 57° C GPU on liquid cooling avoids thermal throttling and can reach its full factory turbo speed essentially all of the time, while consuming over 8% less power than in its regular air-cooled state. Net processor speed increases on liquid cooling without overclocking could range from 10% to 25% or more.
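The “over 8%” figure can be checked directly from the 4%-per-10° C rule of thumb and the 80° C to 57° C drop. Because the text does not say whether the rule scales linearly or compounds per 10° C step, the sketch below shows both readings; neither is a device model, and the function names are ours.

```python
# Power savings from a cooler chip, using the text's rule of thumb of
# roughly 4% less power per 10 degC drop. Two readings are shown:
# straight-line scaling and compounding per 10 degC step.

def savings_linear(temp_drop_c: float, pct_per_10c: float = 4.0) -> float:
    return temp_drop_c / 10 * pct_per_10c


def savings_compound(temp_drop_c: float, pct_per_10c: float = 4.0) -> float:
    return (1 - (1 - pct_per_10c / 100) ** (temp_drop_c / 10)) * 100


if __name__ == "__main__":
    drop = 80 - 57  # air-cooled vs. liquid-cooled GPU temperature, degC
    print(f"linear:   {savings_linear(drop):.1f}% less power")    # 9.2%
    print(f"compound: {savings_compound(drop):.1f}% less power")  # 9.0%
```

Either way the result lands around 9%, consistent with the claim of more than 8% savings.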

Liquid cooling is like a cheat code for datacenter performance and power savings.

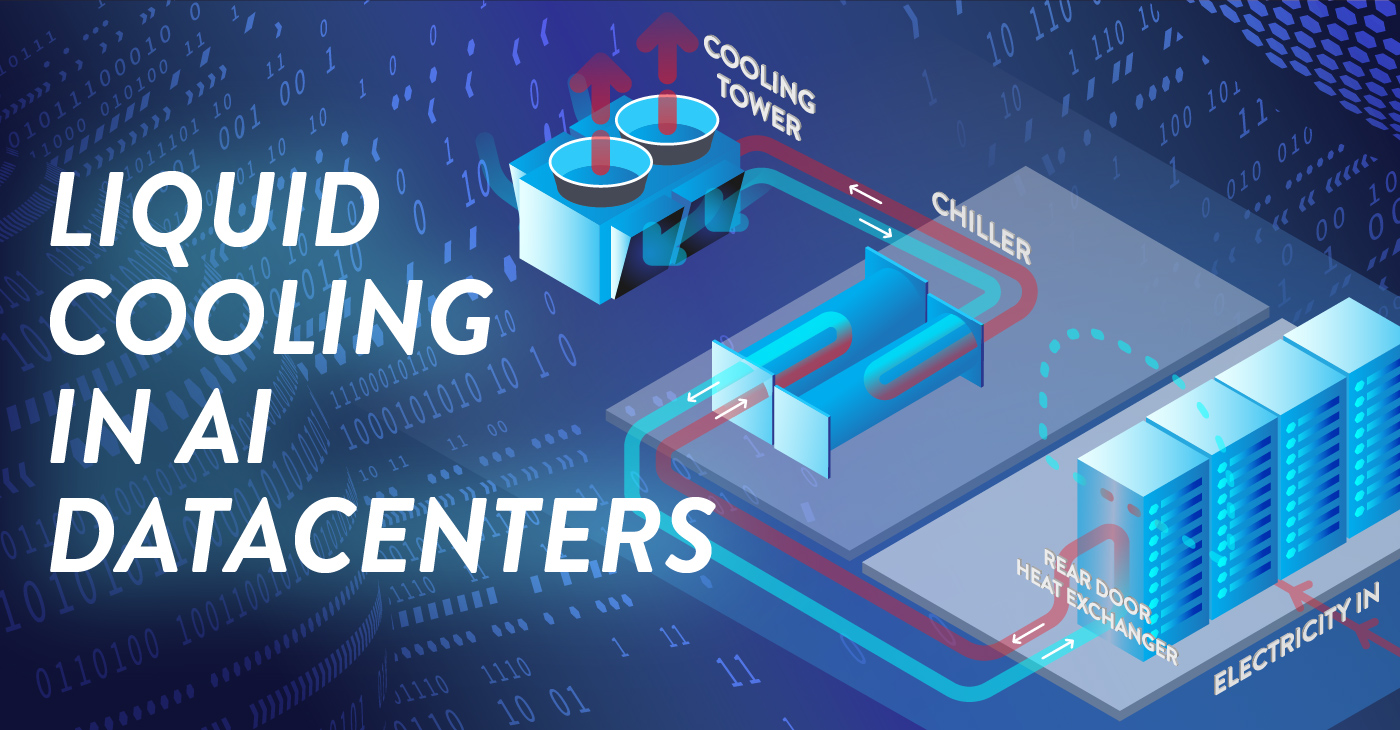

IMPLEMENTING LIQUID

Datacenter liquid cooling systems pump fluid through a loop, passing cooled liquid from device to device (e.g., CPU to GPU to MOSFETs) within a server and then to a manifold. The fluid warms by a few degrees as it passes across each hot device. The heated fluid returns toward the pump and passes through a heat exchanger that transfers the heat into the facility water, which eventually rejects it to the ambient environment through chillers, external radiators, or evaporative cooling towers. As you would expect, rejecting this heat inside the datacenter requires more power and more computer room air conditioning (CRAC) infrastructure. Rejecting heat outside may require longer heat paths and additional equipment, but it also might allow datacenters to turn off chillers in the cold of winter.
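How much fluid must circulate follows from the energy balance Q = ṁ · c_p · ΔT. The sketch below estimates loop flow for a hypothetical 100 kW rack with a 10° C coolant temperature rise. The PG25 specific heat and density are approximate placeholder values we assumed, not datasheet figures; actual values vary with temperature and inhibitor package.

```python
# Coolant flow needed to carry a given heat load: Q = m_dot * cp * dT.
# Approximate fluid properties; the PG25 values are placeholders.
FLUIDS = {
    "water": {"cp": 4186.0, "density": 998.0},  # J/(kg*K), kg/m^3
    "PG25": {"cp": 3900.0, "density": 1020.0},  # approximate
}


def flow_lpm(heat_w: float, delta_t_c: float, fluid: str = "PG25") -> float:
    """Volumetric flow in liters per minute for heat_w watts at a delta_t_c rise."""
    props = FLUIDS[fluid]
    mass_flow_kg_s = heat_w / (props["cp"] * delta_t_c)
    return mass_flow_kg_s / props["density"] * 1000 * 60  # m^3/s -> L/min


if __name__ == "__main__":
    for name in FLUIDS:
        print(f"100 kW rack, 10 degC rise, {name}: {flow_lpm(100_000, 10, name):.0f} L/min")
```

Because PG25 carries slightly less heat per kilogram than plain water, it needs a few percent more flow for the same load, and halving the allowed temperature rise doubles the required flow.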

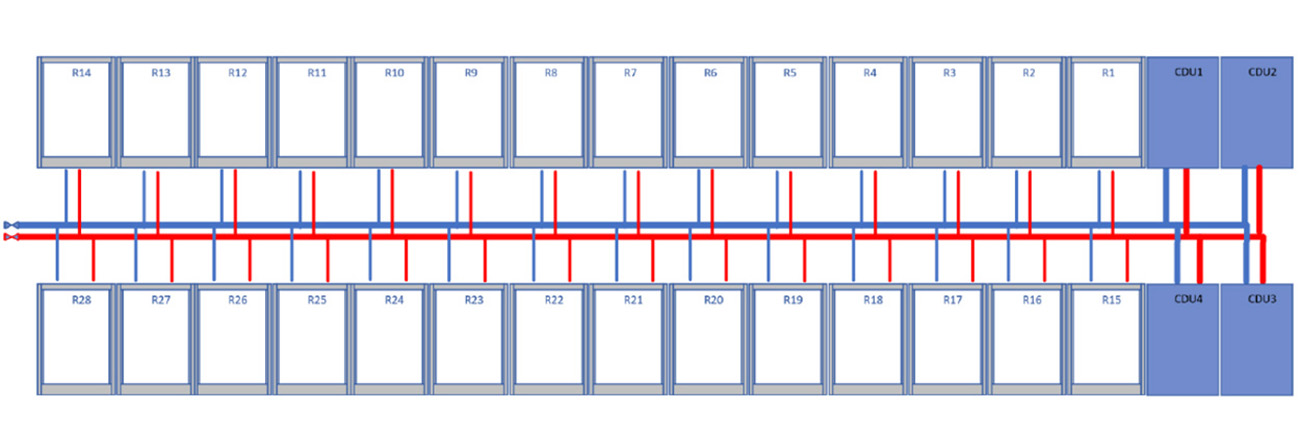

Cooling can be done either within or outside a server rack, and each approach has strengths. Both models typically use a coolant distribution unit (CDU) to remove heat from the rack’s liquid loop and either pass that heat to another liquid loop or release it into the air. Quality CDUs can perform a range of monitoring and alerting functions and dynamically adjust flow rates for both the rack and facility (where applicable) to hold temperature targets for optimal efficiency.
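Dynamic flow adjustment is, at its core, a feedback loop. The sketch below shows one common way to build such a loop, a PI (proportional-integral) controller that nudges secondary-loop flow to hold a return-temperature setpoint. Everything here is hypothetical: the gains, the 50 kW heat load, the 30° C supply and 40° C setpoint, and the first-order lag standing in for the loop’s thermal mass. It is not a description of any particular CDU’s firmware.

```python
# Toy CDU loop: a PI controller adjusts flow to hold a return-temperature
# setpoint. Hypothetical plant: fixed heat load, fixed supply temperature,
# and a first-order lag standing in for thermal mass.
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class PIFlowController:
    kp: float          # kg/s per degC of error
    ki: float          # kg/s per degC*s of accumulated error
    setpoint_c: float  # target return temperature
    flow: float        # current flow command, kg/s
    min_flow: float
    max_flow: float
    prev_error: Optional[float] = None

    def update(self, measured_c: float, dt_s: float) -> float:
        error = measured_c - self.setpoint_c  # positive means too hot
        if self.prev_error is None:
            self.prev_error = error  # avoid a kick on the first step
        # Velocity (incremental) form: clamping the output also stops windup.
        delta = self.kp * (error - self.prev_error) + self.ki * error * dt_s
        self.flow = min(self.max_flow, max(self.min_flow, self.flow + delta))
        self.prev_error = error
        return self.flow


def simulate(steps: int = 600, dt_s: float = 1.0) -> Tuple[float, float]:
    heat_w, cp, supply_c, tau_s = 50_000.0, 3900.0, 30.0, 20.0
    ctrl = PIFlowController(kp=0.05, ki=0.01, setpoint_c=40.0,
                            flow=1.0, min_flow=0.2, max_flow=3.0)
    temp_c = 42.0
    flow = ctrl.flow
    for _ in range(steps):
        flow = ctrl.update(temp_c, dt_s)
        steady_c = supply_c + heat_w / (flow * cp)    # energy balance
        temp_c += (steady_c - temp_c) * dt_s / tau_s  # first-order lag
    return temp_c, flow


if __name__ == "__main__":
    t, f = simulate()
    print(f"return temp {t:.2f} degC at {f:.3f} kg/s")
```

The loop settles at the flow the energy balance predicts (about 1.28 kg/s for 50 kW across a 10° C rise). The incremental form was chosen because clamping the flow command between its limits then prevents integral windup without extra bookkeeping.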

One typical advantage of an in-rack CDU over a row-level unit is that it requires fewer changes to server design. A rack can also host two or more CDUs for redundant failover.

That said, in-rack liquid cooling has its own potential downsides. Servicing in-rack units requires disconnecting them from the manifold, sliding them out of the rack, and disassembling them. (Blind-mate liquid cooling connectors can alleviate this issue.) Open-cabinet coolers, by contrast, need little more than opening the door to reveal redundant systems already running: simply pull and replace the failed parts.

Liquid cooling systems can use a range of coolant types, but the most common is PG25, a mix of 25% propylene glycol and roughly 75% water with traces of other additives to prevent corrosion and mold. The mixture also protects against freezing during a deep-winter power outage, because the last thing anyone wants is for the power to come back on with ice in the coolant tubes. PG25 is safe enough that some jurisdictions allow companies to pour it down the drain. Quality CDUs incorporate both filters for particulates and tanks designed to catch air bubbles, which preserves proper circulation and prevents heat spikes caused by fluid gaps.

Understandably, one of the top concerns voiced by those new to liquid cooling is the fear of spills. Fortunately, industry-standard connectors have valves that close before the outer seal is released, so a disconnect typically loses a drop or less. These small losses, combined with minimal ongoing evaporation, are why systems need occasional replenishing. Most people are surprised by how little fluid cooling systems actually use, often between one and two gallons per rack. A replenishment might add only half a gallon of coolant for every 1,000 racks.

Liquid cooling won’t eliminate the need for system- and rack-level airflow. Cooling plates on hot components still radiate some heat into the surrounding air. Some configurations can run with no fans inside the servers while others still need a few, but in either case the ambient environment must be kept within thresholds. All told, liquid cooling can reduce total fan power by up to 80 percent.
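Part of that fan power reduction comes from removing fans and part from slowing the ones that remain. The ideal fan affinity laws show why slowing fans pays off so well: shaft power scales with the cube of speed. The sketch assumes ideal affinity behavior, which real fans, motors, and system curves only approximate, and the function names are ours.

```python
# Ideal fan affinity law: shaft power scales with the cube of fan speed.
# Real fans, motors, and system curves deviate; treat this as a first cut.

def power_fraction(speed_fraction: float) -> float:
    """Fraction of full power drawn at a given fraction of full speed."""
    return speed_fraction ** 3


def speed_for_power_fraction(power_frac: float) -> float:
    """Fraction of full speed that yields a given fraction of full power."""
    return power_frac ** (1 / 3)


if __name__ == "__main__":
    print(f"Fans at 50% speed draw {power_fraction(0.5):.1%} of full power")
    print(f"An 80% power cut needs about {speed_for_power_fraction(0.2):.0%} speed")
```

Under this idealization, an 80% power cut from speed alone would require running fans at roughly 58% of full speed.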

A BOOMING NEED FOR BETTER COOLING

In some sectors, AI is the environmental bogeyman du jour. As with the Bitcoin network, datacenters, air travel, and even Christmas lights, AI draws fire for its seemingly apocalyptic energy use. Whether such energy use is justified and whether that energy comes largely from renewable, stranded, or waste sources are separate discussions, but eye-popping comparisons remain. One recent study estimated that AI servers could use 85 to 134 terawatt hours (TWh) annually by 2027, on par with the yearly consumption of Argentina, the Netherlands, or Sweden. (Note that the study’s author and his methods have faced substantial criticism.)

Sound or flawed, such analyses continue to attract headlines, and energy consumption remains a valid, serious concern. Larry Fink, CEO of asset management titan BlackRock, recently remarked at a World Economic Forum meeting, “To properly build out AI [datacenters], we’re talking about trillions of dollars of investing. Datacenters today can be as much as 200 megawatts, and now they’re talking about datacenters are going to be one gigawatt. That powers a city. One tech company I spoke to last week said right now all their datacenters are about five gigawatts. By 2030, [because of AI needs,] they’ll need 30 gigawatts.”

According to CNBC, citing Wells Fargo, “AI data centers alone are expected to add about 323 terawatt hours of electricity demand in the U.S. by 2030,” as part of an expected 20% rise in overall U.S. electricity demand. Meanwhile, Goldman Sachs predicts that AI expansion will yield “a 15% CAGR in data center power demand from 2023-2030, driving data centers to make up 8% of total US power demand by 2030 from about 3% currently.”

We’ve discussed how liquid cooling can lower AI datacenter power consumption and costs while significantly improving server performance. Those benefits will only grow as U.S. and global datacenter capacity expands. This analysis focuses on AI, but it also applies to all manner of hyperscale datacenters. A Research and Markets report forecasts that datacenter liquid cooling will grow at a 24.53% CAGR over the coming decade, “driven by increased data center spending and reduction in operational costs for data centers.”

Many organizations are now ready to move from understanding the benefits of liquid cooling to implementing the technology, especially for AI applications. Those wanting a deeper dive on why and how to add liquid cooling to their own hyperscale operations should contact their Hyve representative for more information.